Python automated testing 101

by Roman Podoliaka (roman.podoliaka@gmail.com)

Kharkiv, May 12, 2016

Why automate your test cases?

(open source) projects do not necessarily have dedicated QA engineers

projects are (usually) complex, and engineers' time is precious

regression testing

test-driven development

Automated testing

Test cases usually written by software engineers:

Unit (individual functions or classes)

Integration (modules or logical subsystems)

Test cases usually written by QA engineers:

Functional (checking a program against design specifications)

System (checking a program against system requirements)
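To make the first two levels concrete, here is a minimal sketch of a unit test next to an integration test. The functions `parse_line` and `load_config` are hypothetical and exist only for this illustration.

```python
import os
import tempfile
import unittest


def parse_line(line):
    """Split a 'key = value' line into a (key, value) tuple."""
    key, _, value = line.partition("=")
    return key.strip(), value.strip()


def load_config(path):
    """Read a file of 'key = value' lines into a dict, skipping blank lines."""
    with open(path) as f:
        return dict(parse_line(line) for line in f if line.strip())


class TestParseLine(unittest.TestCase):
    # Unit test: a single function, no files or other collaborators involved.
    def test_strips_whitespace(self):
        self.assertEqual(parse_line(" answer =  42 \n"), ("answer", "42"))


class TestLoadConfig(unittest.TestCase):
    # Integration test: parsing and real file I/O are exercised together.
    def test_reads_file(self):
        with tempfile.NamedTemporaryFile("w", suffix=".cfg", delete=False) as f:
            f.write("answer = 42\n\nname = demo\n")
        self.addCleanup(os.remove, f.name)
        self.assertEqual(load_config(f.name), {"answer": "42", "name": "demo"})
```

The unit test fails only when `parse_line` itself is wrong, while the integration test can also fail because of how the pieces are wired together.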

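Case study: binary search

    def binary_search(arr, key):
        """Return an index of key in the sorted list arr, or None if absent."""
        return _binary_search(arr, key, 0, len(arr))


    def _binary_search(arr, key, left, right):
        # Search the half-open range [left, right).
        if left >= right:
            return None

        middle = left + (right - left) // 2
        if arr[middle] == key:
            return middle
        elif arr[middle] < key:
            # arr[middle] is too small: the key can only be in the right half
            return _binary_search(arr, key, middle + 1, right)
        else:
            # arr[middle] is too large: the key can only be in the left half
            return _binary_search(arr, key, left, middle)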

A test case example

    import unittest


    class TestBinarySearch(unittest.TestCase):
        def setUp(self):
            super().setUp()

            self.empty = []
            self.arr = list(range(10))

        def test_empty_arr(self):
            self.assertIsNone(binary_search(self.empty, 42))

        def test_key_not_found(self):
            self.assertIsNone(binary_search(self.arr, 42))


    suite = unittest.TestSuite()
    suite.addTests([TestBinarySearch('test_empty_arr'),
                    TestBinarySearch('test_key_not_found')])
    unittest.TextTestRunner().run(suite)

    ..
    ----------------------------------------------------------------------
    Ran 2 tests in 0.003s

    OK

    <unittest.runner.TextTestResult run=2 errors=0 failures=0>
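Both tests above only exercise the "key not found" path. A possible next step is to add cases where the key is present. The sketch below is self-contained: it uses its own iterative `binary_search` as a stand-in (an assumption made for this example, not the recursive version from the case study), and the test class name is hypothetical.

```python
import unittest


def binary_search(arr, key):
    """Return an index of key in the sorted list arr, or None if absent."""
    left, right = 0, len(arr)          # search the half-open range [left, right)
    while left < right:
        middle = left + (right - left) // 2
        if arr[middle] == key:
            return middle
        elif arr[middle] < key:
            left = middle + 1          # key can only be to the right
        else:
            right = middle             # key can only be to the left
    return None


class TestBinarySearchHits(unittest.TestCase):
    def setUp(self):
        super().setUp()
        self.arr = list(range(10))

    def test_every_key_is_found(self):
        # Covers hits in both the left and the right half.
        for index, key in enumerate(self.arr):
            self.assertEqual(binary_search(self.arr, key), index)

    def test_key_smaller_than_all(self):
        self.assertIsNone(binary_search(self.arr, -1))

    def test_single_element(self):
        self.assertEqual(binary_search([7], 7), 0)
        self.assertIsNone(binary_search([7], 8))
```

Looping over every key is a cheap way to reach each branch of the search at least once.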

    (.venv3)Romans-Air:code malor$ py.test -v binary.py
    == test session starts
    platform darwin -- Python 3.4.3, pytest-2.9.1, py-1.4.30, pluggy-0.3.1 -- /Users/malor/.venv3/bin/python3.4
    cachedir: .cache
    rootdir: /Users/malor/Dropbox/talks/autotesting/code, inifile:
    collected 2 items

    binary.py::TestBinarySearch::test_empty_arr PASSED
    binary.py::TestBinarySearch::test_key_not_found PASSED

    == 2 passed in 0.05 seconds

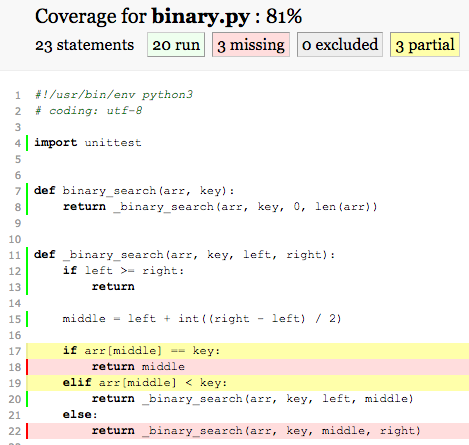

coverage: measuring test coverage

coverage reports which lines of code were actually executed

it shows which parts of the code are covered by tests and which are not

test coverage is a measurable and comparable metric: high test coverage is necessary (but not sufficient) to make sure the code works as expected
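The last point can be illustrated with a small sketch. The `average` function below is hypothetical: one check runs every line of it, so line coverage is complete, and the function is still wrong.

```python
def average(values):
    """Intended to return the arithmetic mean of a non-empty list."""
    total = 0
    for value in values:
        total += value
    return total // len(values)   # bug: floor division drops the fraction


# This single check executes every line of average(), so line coverage is full...
assert average([2, 4]) == 3

# ...yet the function is wrong for inputs the test never tried.
assert average([1, 2]) == 1       # the true mean is 1.5
```

Coverage shows what the tests executed, not whether the assertions were the right ones.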

    (.venv3)Romans-Air:code malor$ coverage report -m
    Name        Stmts   Miss Branch BrPart  Cover   Missing
    -------------------------------------------------------
    binary.py      23      3      8      3    81%   18, 22, 40, 17->18, 19->22, 39->40
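The 81% figure can be reproduced by hand. The sketch below assumes that, with branch measurement enabled, the tool counts executed statements and executed branches together, and that the three arcs in the Missing column are the only branches not taken.

```python
# Figures copied from the binary.py row of the report above.
statements, missed_statements = 23, 3
branches = 8
missed_branches = 3   # assumption: the arcs 17->18, 19->22 and 39->40

# Statements and branches are pooled into one ratio.
executed = (statements - missed_statements) + (branches - missed_branches)
possible = statements + branches

cover = round(100 * executed / possible)
print("{}%".format(cover))   # prints "81%"
```

Here 25 of 31 measured items were executed, which rounds to 81%.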

    [tox]
    envlist = pep8,py27,py35

    [testenv]
    deps = pytest
           pytest-cov
    commands = py.test binary.py --cov --cov-append

    [testenv:pep8]
    deps = flake8
    commands = flake8 binary.py

    (.venv3)Romans-Air:code malor$ tox
    ___________________________________________________________________ summary
      pep8: commands succeeded
      py27: commands succeeded
      py35: commands succeeded
      congratulations :)

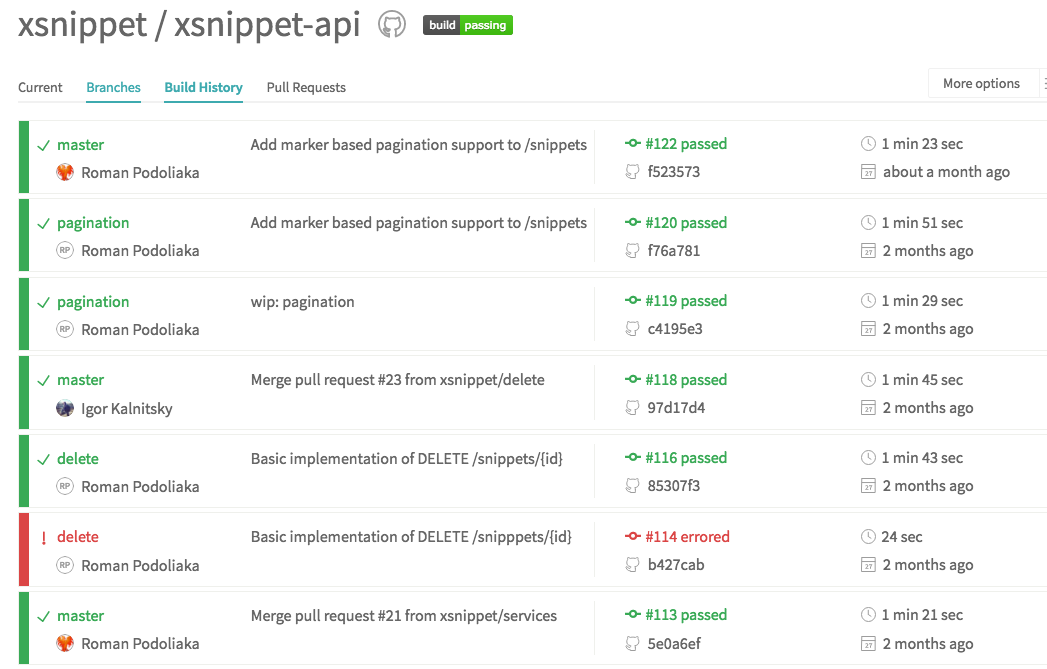

CI (continuous integration)¶

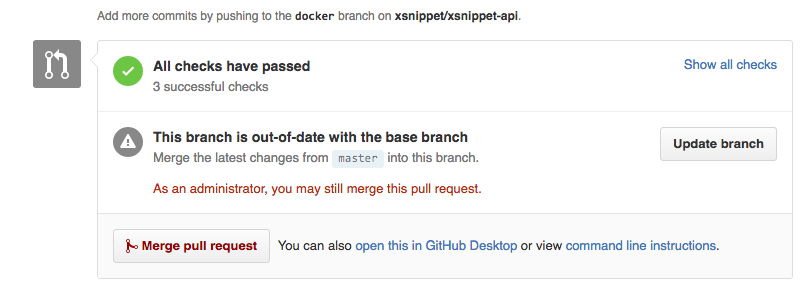

testing is only efficient, if it's enforced (e.g. so that one can't "forget" to run the tests)

CI is intended to automate all the required build steps, including testing

CI can (and should) be run in "gating" mode: a commit can not be merged, if tests fail
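The gating idea boils down to "run every check, and refuse the merge if any of them fails". A minimal sketch, assuming a hypothetical `run_gate` helper and stand-in commands (a real job would run something like tox instead):

```python
import subprocess
import sys


def run_gate(commands):
    """Run each command in order; return True only if all of them succeed."""
    for command in commands:
        print("running:", " ".join(command))
        if subprocess.call(command) != 0:
            print("gate FAILED at:", " ".join(command))
            return False
    print("gate passed")
    return True


# Stand-in commands: the first one succeeds, the second exits with status 1.
passing = [sys.executable, "-c", "print('tests passed')"]
failing = [sys.executable, "-c", "raise SystemExit(1)"]

print(run_gate([passing]))            # True
print(run_gate([passing, failing]))   # False
```

A CI job would typically turn the result into its own exit status, for example `sys.exit(0 if run_gate(checks) else 1)`, so that the code review system can block the merge.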

Travis CI

a third-party CI system that is easily integrated with GitHub

free of charge for open source projects

declarative configuration stored in the repo

    sudo: false
    language: python
    python: 3.5
    services:
      - mongodb
    install:
      # greenlet is needed to submit concurrency=greenlet based coverage
      # to coveralls.io service
      - pip install tox coveralls greenlet
    script:
      - tox
    after_success:
      - coveralls
    notifications:
      email: false

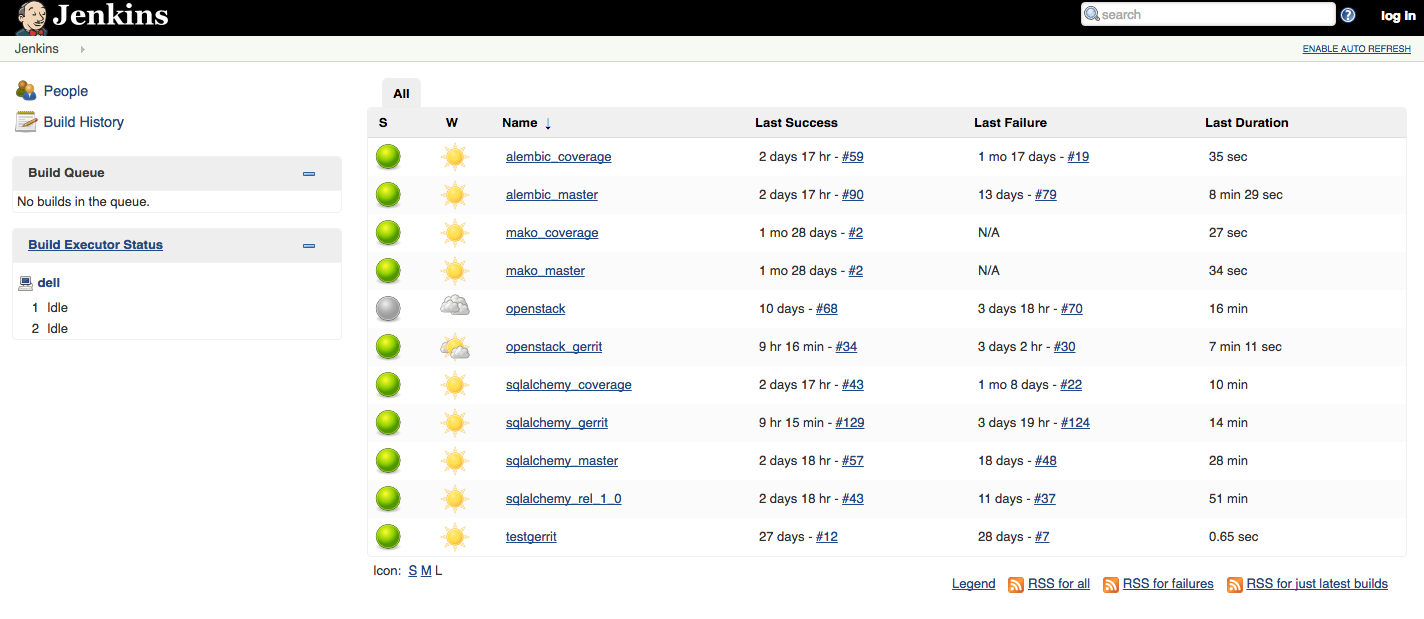

Jenkins

a comprehensive solution for CI/CD

a large number of plugins for automating build steps

the #1 choice when you need a custom CI

can be configured declaratively by means of Jenkins Job Builder

    <?xml version='1.0' encoding='UTF-8'?>
    <project>
      <actions/>
      <description></description>
      <logRotator>
        <daysToKeep>7</daysToKeep>
        <numToKeep>-1</numToKeep>
        <artifactDaysToKeep>-1</artifactDaysToKeep>
        <artifactNumToKeep>-1</artifactNumToKeep>
      </logRotator>
      <keepDependencies>false</keepDependencies>
      <properties/>
      <scm class="hudson.scm.NullSCM"/>
      <canRoam>true</canRoam>
      <disabled>false</disabled>
      <blockBuildWhenDownstreamBuilding>false</blockBuildWhenDownstreamBuilding>
      <blockBuildWhenUpstreamBuilding>false</blockBuildWhenUpstreamBuilding>
      <triggers class="vector"/>
      <concurrentBuild>false</concurrentBuild>
      <builders>
        <hudson.tasks.Shell>
          <command># Move into the jenkins directory
    cd /var/lib/jenkins

    # Add all top level xml files.
    git add *.xml

    # Add all job config.xml files.
    git add jobs/*/config.xml

    # Add all user config.xml files.
    git add users/*/config.xml

    # Add all user content files.
    git add userContent/*

    # Remove files from the remote repo that have been removed locally.
    COUNT=`git log --pretty=format: --name-only --diff-filter=B | wc -l`
    if [ $COUNT -ne 0 ]
      then git log --pretty=format: --name-only --diff-filter=B | xargs git rm
    fi

    # Commit the differences
    git commit -a -m "Automated commit of jenkins chaos"

    # Push the commit up to the remote repository.
    git push origin master
    </command>
        </hudson.tasks.Shell>
      </builders>
      <publishers/>
      <buildWrappers/>
    </project>

    - common-sync-params: &common-sync-params
        # Branches to sync (see also short names below)
        upstream-branch: 'stable/mitaka'
        downstream-branch: '9.0/mitaka'
        fallback-branch: 'master'

        # Branch short names for jobs naming
        src-branch: mitaka
        dst-branch: 9.0

        # Synchronization schedule
        sync-schedule: 'H 3 * * *' # every day at 3:XX am, spread evenly

        # Gerrit parameters
        gerrit-host: 'review.fuel-infra.org'
        gerrit-port: '29418'
        gerrit-user: 'openstack-ci-mirrorer-jenkins'
        gerrit-creds: 'a4be8c41-43e0-4269-94d8-53133cfe3ae5'
        gerrit-topic: 'sync/stable/mitaka'

        name: 'sync-{name}-{src-branch}-{dst-branch}'
        jobs:
          - 'sync-{name}-{src-branch}-{dst-branch}'
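The `{name}`, `{src-branch}` and `{dst-branch}` placeholders in the job name are filled in from parameters like the ones defined above. The sketch below only mimics that substitution with Python's `str.format`; it is not Jenkins Job Builder's actual expansion logic, and the project name `nova` is a hypothetical value chosen for the example.

```python
# Parameter values: the branch short names come from the config above,
# the project name is a made-up example.
params = {
    "name": "nova",
    "src-branch": "mitaka",
    "dst-branch": "9.0",
}

template = "sync-{name}-{src-branch}-{dst-branch}"

# Keys containing hyphens cannot be passed as keyword arguments literally,
# but unpacking a dict works.
job_name = template.format(**params)
print(job_name)   # sync-nova-mitaka-9.0
```

One template can thus produce a separate, consistently named job for every project and branch pair.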

Useful links

Travis CI - CI as a service that is easily integrated with GitHub

Test Driven Development - a book on TDD by Kent Beck

Understanding the OpenStack CI System - a blog post on OpenStack CI internals by Jay Pipes

Summary

“If it’s not tested, it’s broken.” (Monty Taylor)

test coverage is an important metric, but it's not the only one - use common sense!

writing tests is not always fun, but it makes your life easier

Travis CI is a good start, but sometimes a more complex or just custom configuration is needed - this is where Jenkins shines

Thank you!

Slides: http://podoliaka.org/talks/

Your feedback is welcome: @rpodoliaka or roman.podoliaka@gmail.com